Running our own image processing CDN

2025-11-02

Jonathan Ho

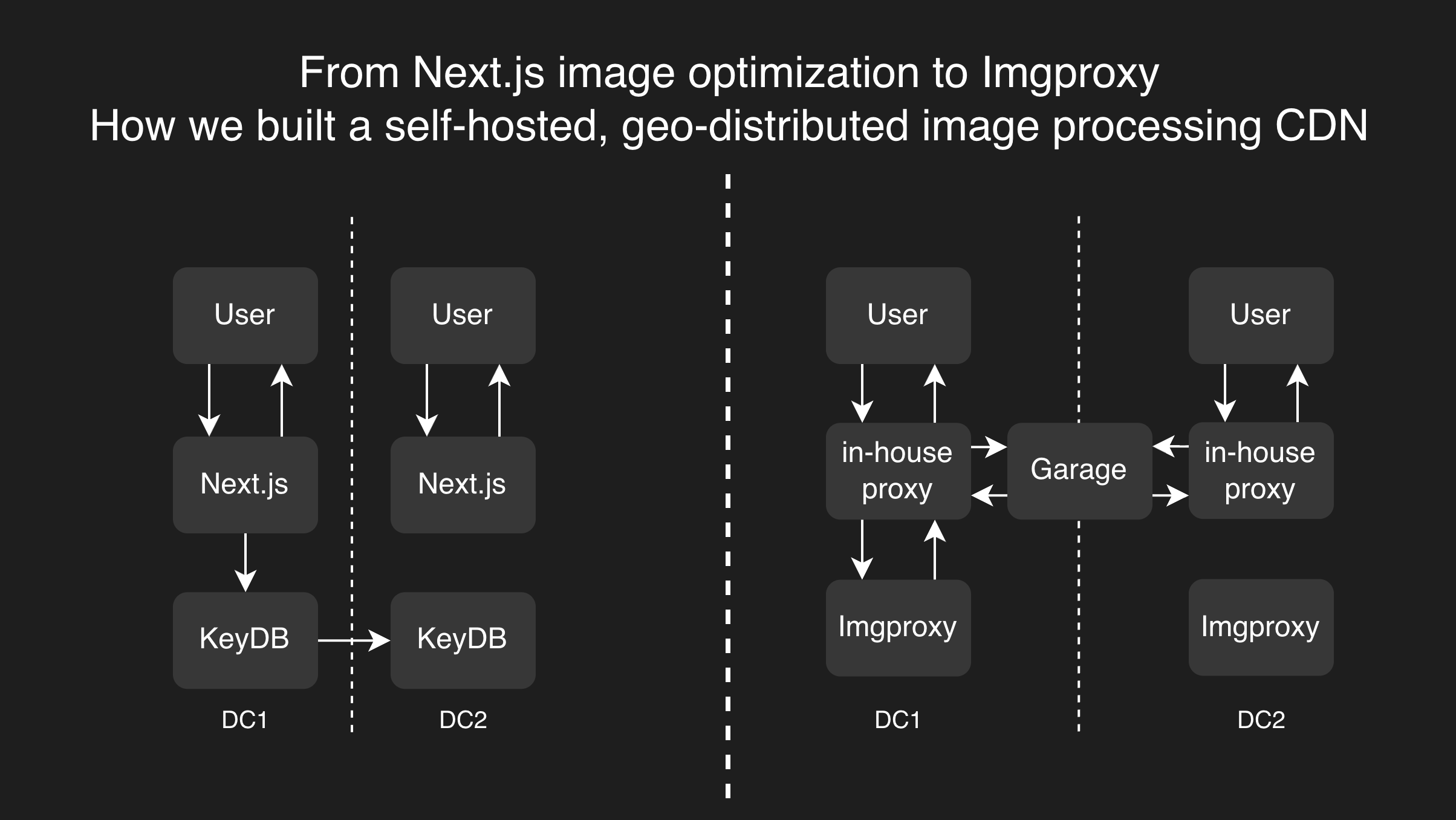

In a previous blog post, we discussed how we use KeyDB to cache optimized images for the Next.js Image component. This helps improve performance and reduce resource usage on our edge servers. However, after some time, we ran into significant drawbacks that pushed us to rethink our image processing strategy.

Problems of Next.js + KeyDB

We face two main issues with that approach.

- Maintenance: We rely on patching Next.js's code to cache images to KeyDB. Every time there is a new version of Next.js, we need to re-create the patch (a yarn patch is tied to a specific package version), which is tedious.

- Resource usage: KeyDB is an in-memory database, so the cached images are stored in memory, a scarce resource on our edge servers. On top of that, after a server reboot we sometimes saw KeyDB sustain constantly high bandwidth usage. We suspect it was syncing, but it never stopped.

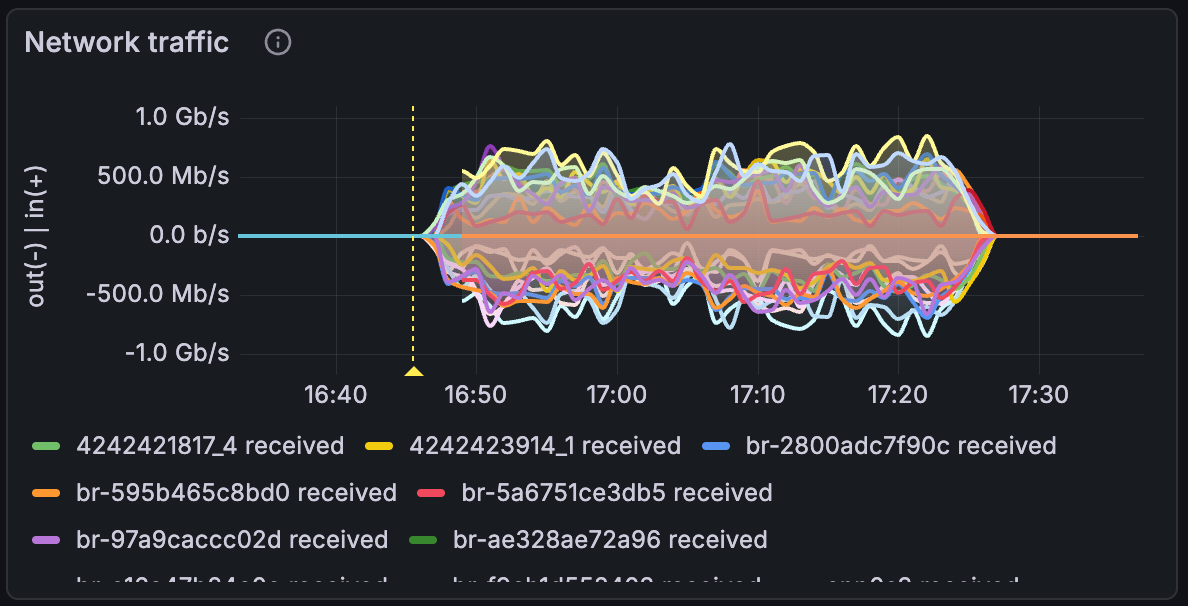

The network traffic graph above shows the bandwidth usage of all our edge servers; the yellow dash marks the time of the reboot. After the reboot, traffic spiked from 2 Mbps to 800 Mbps, and it didn't stop until we manually restarted KeyDB.

So we decided to ditch the whole Next.js image optimization and run our own image processing CDN in a better way.

Image processing server

First, we need an image processing server. There are a few popular open-source options, such as Imgproxy, Thumbor, and Imagor. After evaluating them, we decided to use Imgproxy, which we found to be faster and more widely used. The setup is straightforward: add Imgproxy to Coolify, set the environment variables, and finally set the image loader in Next.js to use Imgproxy. With image processing in place, we can start looking at caching.
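For illustration, a custom loader along these lines could build the Imgproxy URL. This is a minimal sketch, not the production loader: the host `img.example.com` and the default quality of 75 are hypothetical, and it assumes URL signing is disabled on the Imgproxy instance, so any placeholder such as `insecure` is accepted in the signature position. In Next.js, a function like this would be the default export of the file referenced by `images.loaderFile`, with `images.loader` set to `'custom'`.

```typescript
// Arguments Next.js passes to a custom image loader.
type LoaderArgs = { src: string; width: number; quality?: number };

// Hypothetical public address of the Imgproxy (or caching proxy) endpoint.
const IMGPROXY_BASE = "https://img.example.com";

// Builds an Imgproxy URL of the form
//   /<signature>/<processing options>/plain/<source url>
// In a real loader file this function would be the default export.
function imgproxyLoader({ src, width, quality }: LoaderArgs): string {
  // w = target width, q = output quality (short option names in Imgproxy).
  const options = [`w:${width}`, `q:${quality ?? 75}`].join("/");
  // Percent-encode the source so characters like "?" and "@" in it
  // do not clash with Imgproxy's own URL syntax.
  return `${IMGPROXY_BASE}/insecure/${options}/plain/${encodeURIComponent(src)}`;
}
```

Because the URL is fully determined by the source, width, and quality, identical requests map to identical URLs, which is what makes the result easy to cache downstream.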

Caching

With the same goal of reducing duplicated optimization, we need caching for Imgproxy. Unlike other alternatives, Imgproxy does not have a built-in result storage option, so we need to run a separate proxy in front of it.

To optimize each image once and reuse the result for all subsequent requests, we gathered the following requirements for the caching proxy:

- The proxy should be able to cache the optimized image and share the cache across all datacenters.

- The cache should be able to replicate across multiple datacenters.

- The cache should require no special config and be lightweight, so we can deploy it to all our edge servers.

During our research, we came across Garage, an S3-compatible object storage server designed to be geo-distributed. It fulfills our requirement of a geo-distributed cache that replicates across multiple data centers, so we decided to use Garage as our cache storage.

With the cache storage solved, we need a caching proxy that can read from and write to S3. Unfortunately, after a brief look, we found no off-the-shelf proxy that supports S3 as the cache backend (most use local in-memory or file storage for performance). That led us to write our own caching proxy.
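The core logic of such a proxy is a read-through cache: look the image up in the object store, and only on a miss ask the image processor and save the result. The sketch below shows that flow under stated assumptions. The `ObjectStore` interface, the in-memory `MemoryStore` standing in for a Garage bucket, and the choice of using the request path as the object key are all hypothetical, and it buffers whole images for simplicity rather than streaming them.

```typescript
// Hypothetical minimal view of an S3-compatible bucket.
interface ObjectStore {
  get(key: string): Promise<Uint8Array | undefined>;
  put(key: string, body: Uint8Array): Promise<void>;
}

// In-memory stand-in, only so the sketch runs without a real bucket.
class MemoryStore implements ObjectStore {
  private objects = new Map<string, Uint8Array>();
  async get(key: string): Promise<Uint8Array | undefined> {
    return this.objects.get(key);
  }
  async put(key: string, body: Uint8Array): Promise<void> {
    this.objects.set(key, body);
  }
}

// Stand-in for a request to the image processor.
type Origin = (path: string) => Promise<Uint8Array>;

// Read-through lookup: serve from the store on a hit; on a miss,
// fetch from the origin, save the result, then serve it.
async function getImage(
  path: string,
  store: ObjectStore,
  origin: Origin,
): Promise<{ body: Uint8Array; hit: boolean }> {
  // Assumption: the request path (minus leading slashes) is the object key.
  const key = path.replace(/^\/+/, "");
  const cached = await store.get(key);
  if (cached !== undefined) return { body: cached, hit: true };

  const body = await origin(path);
  await store.put(key, body);
  return { body, hit: false };
}
```

With a shared, replicated store behind `ObjectStore`, a miss in one data center can become a hit in every other one, which is the point of the requirements above.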

Using Node.js with Express and @aws-sdk/client-s3, we implemented a simple caching proxy in around 100 lines of code. It uses a Readable stream to pipe the response from Imgproxy to both Garage and the user at the same time. The image is downloaded from Imgproxy and sent on to the client simultaneously, with no added latency from buffering or temporary file writes. This streaming design keeps the proxy lightweight and memory efficient.
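The fan-out idea can be sketched with Node.js streams alone. This is an illustration under assumptions, not the actual proxy: `teeStream`, `collector`, and `demo` are hypothetical names, the two collectors stand in for the HTTP response and the S3 upload, and error handling is left out (a real proxy would need to make sure a failed cache write does not break the response to the user).

```typescript
import { Readable, Writable } from "node:stream";
import { finished } from "node:stream/promises";

// Send one upstream body to two destinations at once.
// `client` stands in for the HTTP response, `cache` for the object-store upload.
async function teeStream(upstream: Readable, client: Writable, cache: Writable): Promise<void> {
  // A Readable can be piped to several Writables: every chunk goes to both,
  // and the source pauses until the slower destination has drained.
  upstream.pipe(client);
  upstream.pipe(cache);
  // Resolve once both destinations have received and flushed everything.
  await Promise.all([finished(client), finished(cache)]);
}

// Demo helper: a Writable that records every chunk it receives.
function collector(): { sink: Writable; chunks: Buffer[] } {
  const chunks: Buffer[] = [];
  const sink = new Writable({
    write(chunk, _encoding, callback) {
      chunks.push(Buffer.from(chunk));
      callback();
    },
  });
  return { sink, chunks };
}

// Runs the tee against a fake two-chunk "image" and returns what each side saw.
async function demo(): Promise<[string, string]> {
  const upstream = Readable.from([Buffer.from("fake-"), Buffer.from("image-bytes")]);
  const client = collector();
  const cache = collector();
  await teeStream(upstream, client.sink, cache.sink);
  return [Buffer.concat(client.chunks).toString(), Buffer.concat(cache.chunks).toString()];
}
```

Only the chunk currently in flight is held in memory, so the cost per request stays roughly constant regardless of image size.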

Result

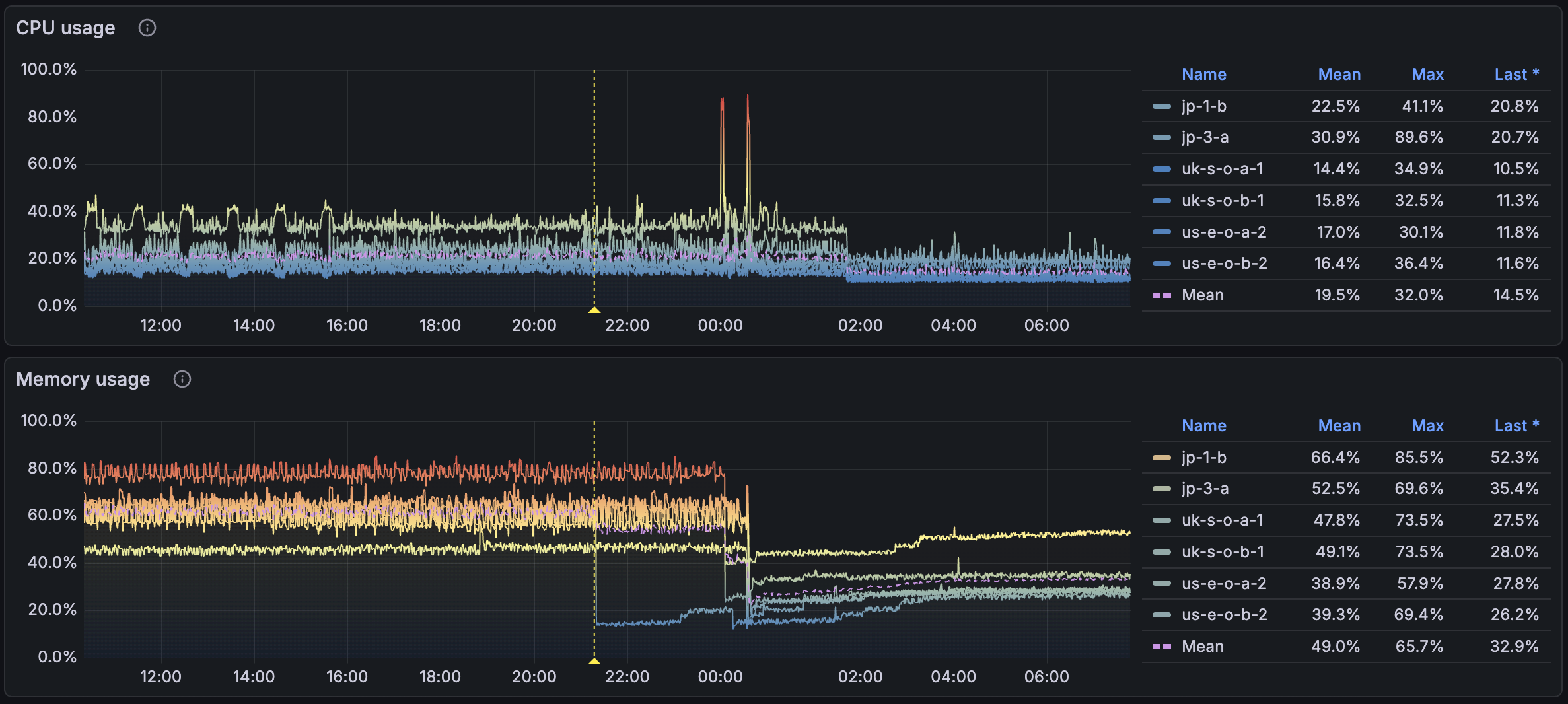

After switching to our own image processing CDN, we monitored the CPU and memory usage of our edge servers. The graph above shows both before and after the switch. Memory usage dropped significantly, from an average of 65% to 33%, and CPU usage dropped by 5%. This is because we no longer need to run KeyDB, an in-memory database, and because the cache hit rate is higher: Garage provides read-after-write consistency, whereas the previous setup relied on asynchronous replication.

Conclusion

What started as a small performance optimization turned into a full-fledged edge image CDN, built with open-source components and minimal custom code. By combining Imgproxy for processing, Garage for distributed caching, and a lightweight Node.js proxy, we now have a scalable, maintainable, and transparent image processing system that fits well into our infrastructure. In the future, we plan to move our images from Cloudinary to Garage. The main blocker is the image metadata API that Cloudinary provides, but that's a story for another day.